🌐 High-Impact Practices 2.0: Designing, Measuring, and Predicting Student Success

👋 Introduction

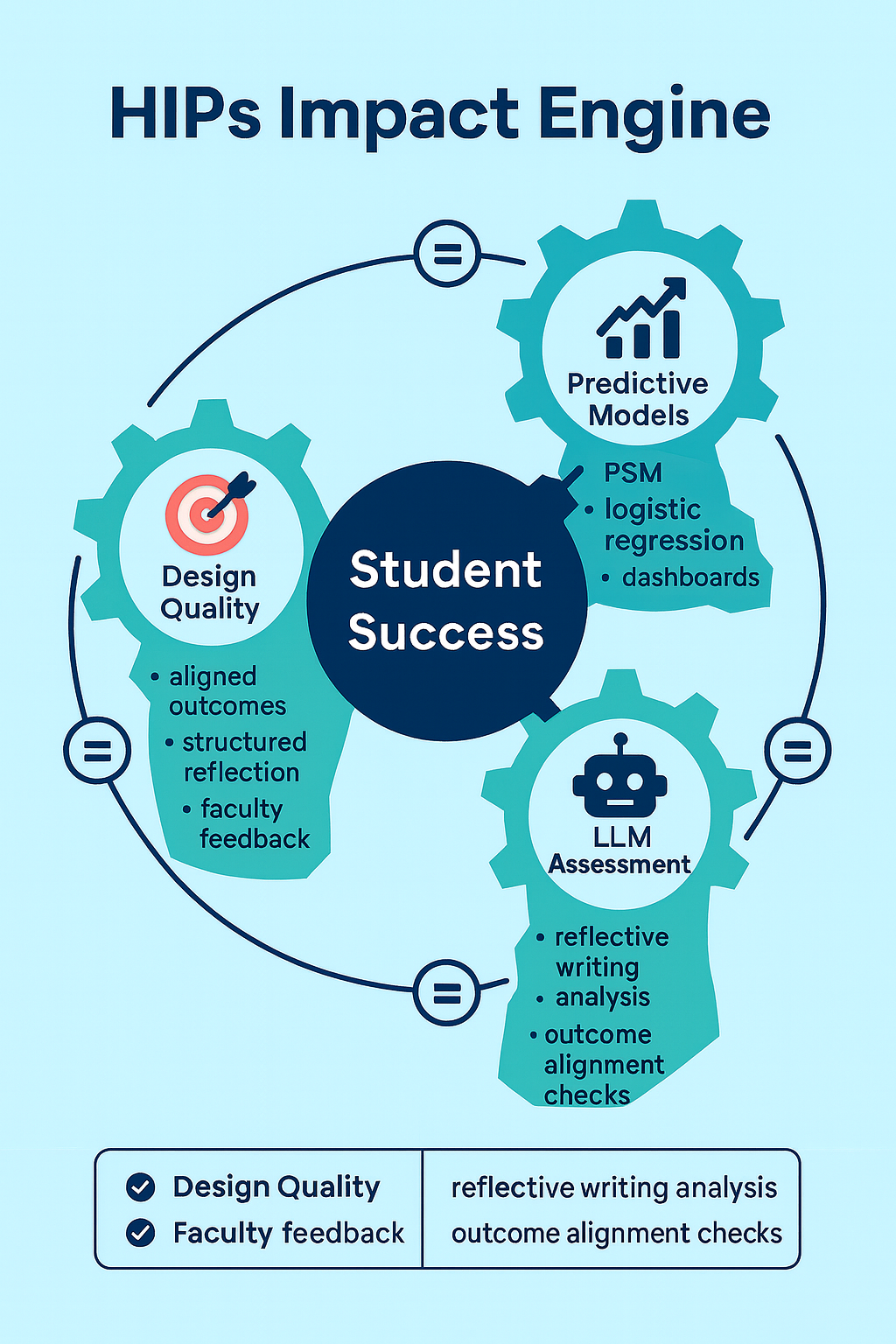

High-Impact Practices (HIPs) have long been celebrated as the “secret sauce” of deep learning—think global intercultural programs, undergraduate research, and service-learning. But as campuses face tighter budgets and bigger data expectations, the conversation is evolving. It’s no longer enough to offer HIPs; we need to design them well, measure their quality, and predict their impact on student outcomes.

This week, we’ll explore how faculty and administrators can raise the bar on HIPs: designing with intention, assessing quality with data and rubrics, and using analytic tools to estimate and scale impact, including Propensity Score Matching (PSM), predictive models, and Large Language Models (LLMs).

💡 Best Practices & Tips

| Focus Area | Practical Steps | Watch Out For |
|---|---|---|
| 1️⃣ Design With Purpose 🎯 | – Link HIPs explicitly to Program & Course Learning Outcomes. – Use backward design to define desired skills (e.g., intercultural competence, critical thinking) first. | HIPs that feel “cool” but don’t connect to curriculum or outcomes. |
| 2️⃣ Quality Matters: Define “Done Well” 🌟 | – Apply AAC&U HIP Quality Dimensions: significant investment of time/effort, rich feedback, meaningful interactions, real-world relevance, and periodic reflection. – Use structured reflection prompts to reinforce learning. | Counting participation only (e.g., “200 students did service-learning”) without examining quality or learning depth. |
| 3️⃣ Predict and Prove Impact 📊 | – Use Propensity Score Matching (PSM) to isolate HIP effects on retention and graduation. – Use institutional predictive models (e.g., logistic regression, machine learning) to spot at-risk students and optimize HIP access. | Assuming HIPs benefit all students equally without controlling for selection bias. |
| 4️⃣ Harness LLMs for Assessment 🤖 | – Use AI to analyze reflective essays or project reports for evidence of critical thinking or civic engagement. – Deploy LLMs to evaluate alignment between HIP activities and intended learning outcomes. | Relying on AI outputs without human review or ethical safeguards. |
| 5️⃣ Equity First ⚖️ | – Disaggregate participation and outcomes by demographics (race, income, first-gen). – Provide micro-grants or flexible formats to reduce barriers to access. | Assuming a single HIP model fits all student groups. |
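
To make the "Equity First" row concrete, here is a minimal sketch of disaggregating HIP participation by one demographic field. The record layout (`pell`, `first_gen`, `in_hip`) and the six sample students are hypothetical, made up for illustration; a real analysis would draw on institutional records and follow your campus data-privacy rules.

```python
from collections import defaultdict

def participation_by_group(students, group_key):
    """Return {group: (participants, total, rate)} for one demographic field."""
    counts = defaultdict(lambda: [0, 0])  # group -> [participants, total]
    for s in students:
        tally = counts[s[group_key]]
        tally[0] += 1 if s["in_hip"] else 0
        tally[1] += 1
    return {g: (p, n, p / n) for g, (p, n) in counts.items()}

# Hypothetical records, not real student data.
students = [
    {"id": 1, "pell": True, "first_gen": True, "in_hip": False},
    {"id": 2, "pell": True, "first_gen": False, "in_hip": True},
    {"id": 3, "pell": False, "first_gen": False, "in_hip": True},
    {"id": 4, "pell": False, "first_gen": True, "in_hip": True},
    {"id": 5, "pell": True, "first_gen": True, "in_hip": False},
    {"id": 6, "pell": False, "first_gen": False, "in_hip": False},
]

pell_rates = participation_by_group(students, "pell")
for group, (p, n, rate) in sorted(pell_rates.items()):
    print(f"pell={group}: {p}/{n} participated ({rate:.0%})")
```

The same function can be called with `"first_gen"` (or any other field) to compare gaps across groups before deciding where micro-grants or flexible formats might help most.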

💡 Quick win: Start with one HIP (like service-learning), map every activity to CLOs/PLOs, and use LLMs to scan syllabi for outcome alignment before scaling.
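
Before bringing an LLM into the picture, the mapping step itself can be checked with a few lines of code. The sketch below assumes you have already recorded which outcomes each activity targets; the activity names and CLO labels are hypothetical placeholders.

```python
def outcome_coverage(activity_map, outcomes):
    """Return (outcomes no activity addresses, activities mapped to no outcome)."""
    covered = set()
    for mapped in activity_map.values():
        covered.update(mapped)
    uncovered = [o for o in outcomes if o not in covered]
    unmapped = [a for a, mapped in activity_map.items() if not mapped]
    return uncovered, unmapped

# Hypothetical course map for one service-learning section.
outcomes = ["CLO1", "CLO2", "CLO3"]
activity_map = {
    "community partner project": ["CLO1", "CLO2"],
    "reflection journal": ["CLO2"],
    "site orientation": [],
}

uncovered, unmapped = outcome_coverage(activity_map, outcomes)
print("Outcomes with no activity:", uncovered)
print("Activities with no outcome:", unmapped)
```

Gaps in either list are a prompt for a design conversation, not an automatic verdict: an orientation session may be worth keeping even if it maps to no outcome.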

🏫 Example/Case Illustration

Case: Predictive Modeling Meets Service-Learning

A mid-sized metropolitan university wanted to know whether service-learning truly improved student retention and long-term success—or if the most motivated students were simply self-selecting.

Step 1: Define “Done Well”

Faculty redesigned service-learning courses to meet five AAC&U quality criteria. Each course required a semester-long community partnership, structured reflection journals, and faculty feedback loops.

Step 2: Build Predictive Models

The analytics team gathered five years of student records, including demographics, prior GPA, and financial-aid status. They used Propensity Score Matching (PSM) to create comparable groups of service-learning and non-service-learning students.
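
For readers who want to see the mechanics, here is a rough sketch of one common PSM recipe: fit a logistic regression for participation, then pair each participant with the non-participant whose score is closest. It is not the university's actual pipeline. The data are simulated (with a built-in retention bump for participants), and the two covariates, the 0.05 caliper, and the greedy 1:1 matching are all assumptions chosen for illustration.

```python
import math
import random

def predict(w, x):
    """Propensity score: estimated probability of HIP participation."""
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.5, epochs=500):
    """Fit logistic regression by batch gradient descent; bias is w[0]."""
    w = [0.0] * (len(X[0]) + 1)
    n = len(X)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi
            grad[0] += err
            for j, xij in enumerate(xi):
                grad[j + 1] += err * xij
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w

def match_pairs(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on the score, without replacement."""
    available = list(range(len(controls)))
    pairs = []
    for t in treated:
        if not available:
            break
        j = min(available, key=lambda k: abs(controls[k]["ps"] - t["ps"]))
        if abs(controls[j]["ps"] - t["ps"]) <= caliper:
            pairs.append((t, controls[j]))
            available.remove(j)
    return pairs

# Simulated students (NOT real data): higher-GPA students self-select into
# the HIP, and participants get an assumed 0.05 bump in retention probability.
random.seed(0)
students = []
for _ in range(300):
    gpa = random.uniform(2.0, 4.0)
    pell = 1 if random.random() < 0.4 else 0
    p_enroll = 1 / (1 + math.exp(-(1.5 * (gpa - 3.0) - 0.5 * pell)))
    treated_flag = 1 if random.random() < p_enroll else 0
    p_retain = 0.55 + 0.10 * (gpa - 2.0) + 0.05 * treated_flag
    retained = 1 if random.random() < p_retain else 0
    students.append({"x": [gpa - 3.0, pell], "treated": treated_flag,
                     "retained": retained})

w = fit_logistic([s["x"] for s in students], [s["treated"] for s in students])
for s in students:
    s["ps"] = predict(w, s["x"])

treated = [s for s in students if s["treated"]]
controls = [s for s in students if not s["treated"]]
pairs = match_pairs(treated, controls)

# Retention difference among matched pairs, as a proportion.
diff = sum(t["retained"] - c["retained"] for t, c in pairs) / len(pairs) if pairs else 0.0
print(f"{len(pairs)} matched pairs; retention difference = {diff:+.3f}")
```

In practice, an analytics team would also check covariate balance after matching and would likely use an established statistics package rather than hand-rolled code. The point here is only to show why matched comparisons differ from raw participant-versus-everyone comparisons.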

Step 3: Integrate LLM Analysis

An in-house LLM was trained to read and code student reflections for evidence of problem-solving and civic engagement, creating a “learning depth” metric that supplemented grade data.
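
One way such a "learning depth" metric might be computed, once reflections have been coded, is sketched below. It assumes each reflection already carries a Bloom's-level label from the LLM and that a human rater has coded the same sample. The labels are invented, and Cohen's kappa is our suggestion for checking LLM-human agreement, not something the case describes.

```python
# Revised Bloom's taxonomy, lowest to highest.
BLOOM = ["remember", "understand", "apply", "analyze", "evaluate", "create"]
RANK = {level: i for i, level in enumerate(BLOOM)}

def share_at_or_above(codes, threshold="analyze"):
    """Fraction of reflections coded at the threshold level or higher."""
    return sum(RANK[c] >= RANK[threshold] for c in codes) / len(codes)

def cohens_kappa(a, b):
    """Chance-corrected agreement between two raters on the same items."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    labels = set(a) | set(b)
    expected = sum((a.count(l) / n) * (b.count(l) / n) for l in labels)
    if expected == 1.0:  # both raters used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical codes for the same eight reflections.
llm_codes = ["apply", "analyze", "evaluate", "understand",
             "analyze", "apply", "create", "analyze"]
human_codes = ["apply", "analyze", "analyze", "understand",
               "analyze", "apply", "create", "apply"]

depth = share_at_or_above(llm_codes)
kappa = cohens_kappa(llm_codes, human_codes)
print(f"Share at 'analyze' or higher: {depth:.3f}")
print(f"LLM vs. human agreement (kappa): {kappa:.3f}")
```

A low kappa on the human-coded sample is a signal to revisit the rubric or the prompt before trusting the metric across all reflections.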

Results:

- PSM revealed a 7% higher second-year retention for students in “done well” service-learning courses compared to matched peers.

- LLM-coded reflections showed a 25% higher rate of advanced problem-solving skills (Bloom’s level “analyze” or higher).

- Equity audits revealed that targeted micro-grants doubled participation among Pell-eligible students.

Faculty shared findings in a campus-wide showcase, and the university’s strategic plan now includes a goal to scale HIPs with documented predictive impact, not just participation counts.

This case underscores how design quality + predictive modeling + LLM insight can transform HIPs from inspiring stories into evidence-based engines of student success.

🧭 Closing

The HIP conversation is maturing. The new question isn’t “Do you offer service-learning or undergraduate research?” but “Are those experiences well-designed, equitable, and predictive of success?”

Forward-thinking institutions are:

- Embedding AAC&U quality dimensions into course planning.

- Using predictive analytics and PSM to measure true impact.

- Leveraging LLMs to analyze learning depth and outcome alignment at scale.

This integrated approach helps universities move from anecdotes to evidence, from participation counts to proven transformation.

👉 Next week: We’ll shift gears to Designing High-Impact Practices That Work, looking at how to build HIPs that not only sparkle in theory but also move the needle on student success.

❓ Question of the Week

Which element of the HIP Impact Engine—Design Quality, Predictive Modeling, or LLM Assessment—would most strengthen your campus initiatives if you focused on it this semester?